Oracle Chief Targets AI Inference as Next Multi-Trillion-Dollar Opportunity

Oracle co-founder Larry Ellison has identified artificial intelligence inference as the next major frontier in computing. He projects that the market could be worth trillions of dollars as the technology moves beyond training models to deploying them across diverse industries.

Ellison’s comments signal a strategic shift in focus within the AI industry, where much of the attention and investment has centered on training large language models and generative AI systems. Inference, the process of using trained AI models to make predictions and decisions in real-world applications, represents the practical deployment phase that could deliver widespread economic value.

The Path From Training to Inference

While AI model training has dominated headlines and captured significant computing resources, inference operations occur continuously once models are deployed. Every query to a chatbot, every recommendation algorithm, and every autonomous driving decision involves inference computing. The cumulative demand for these operations across billions of devices and applications creates sustained, large-scale infrastructure requirements.

Oracle’s strategic positioning reflects a growing industry expectation that inference workloads will ultimately dwarf training in both volume and economic impact. As organizations move from experimental AI projects to production deployments, computational demand shifts from periodic training runs to continuous inference serving millions of users.

Applications Across Critical Industries

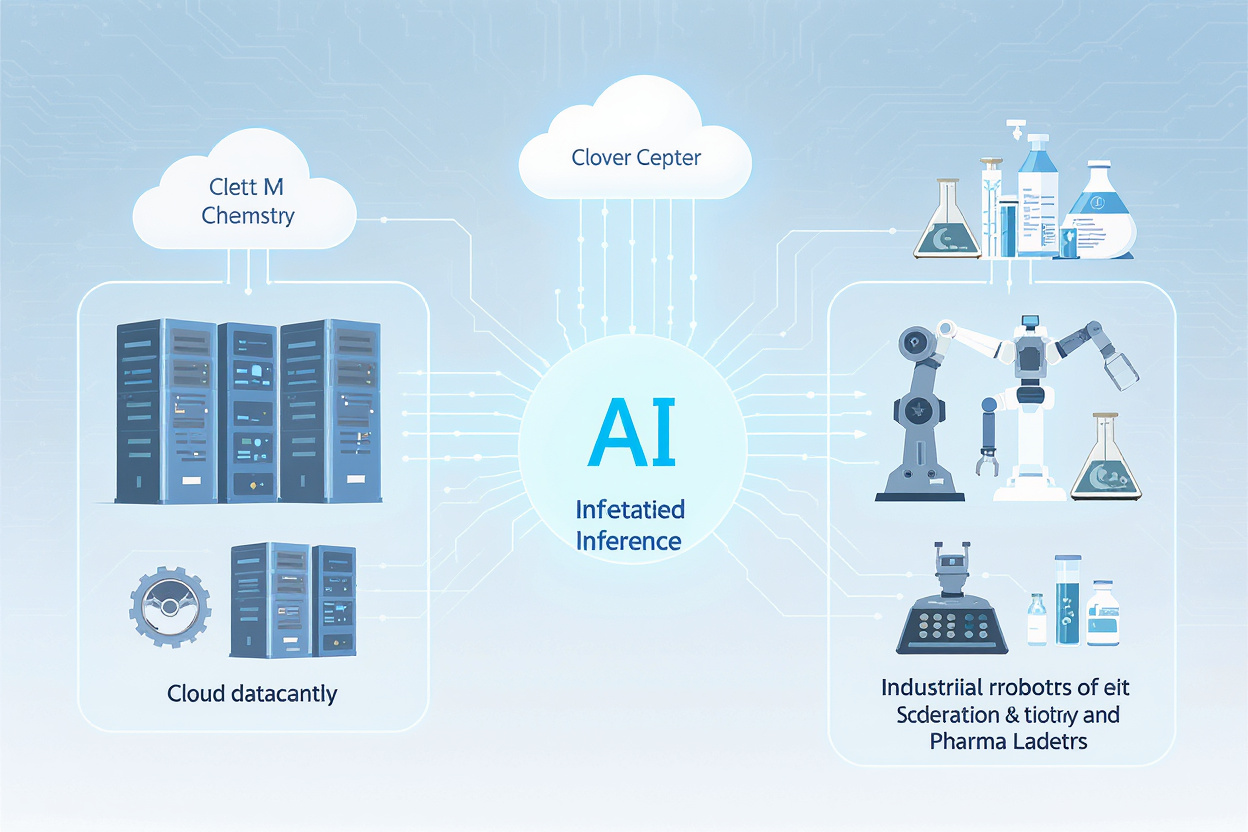

Ellison specifically highlighted robotics and drug design as fields where AI inference shows transformative potential. In pharmaceutical development, inference-powered systems can rapidly evaluate molecular structures and predict drug interactions, potentially accelerating the timeline from discovery to clinical trials. Robotics applications require real-time inference processing to enable machines to perceive environments, make decisions, and execute tasks autonomously.

These applications demand different infrastructure characteristics than training operations. Inference workloads prioritize low latency, high availability, and cost efficiency for sustained operations rather than the raw computational power needed for training massive models.

Cloud Infrastructure Implications

Oracle’s emphasis on inference aligns with the company’s cloud infrastructure business, where specialized computing resources optimized for inference workloads could differentiate offerings in an increasingly competitive market. The inference market opportunity extends beyond hyperscale cloud providers to edge computing environments, where processing occurs closer to data sources and end users.

The distributed nature of inference computing creates opportunities for cloud providers who can deliver efficient, scalable infrastructure across geographic regions and deployment environments. Organizations deploying AI applications require infrastructure that can handle variable workloads while maintaining performance standards and managing costs.

Market Dynamics and Competition

Major technology companies have already begun positioning for the inference market. Semiconductor manufacturers are developing chips specifically optimized for inference operations, offering improved performance per watt compared to general-purpose processors. Cloud providers are expanding specialized instance types and services designed for inference workloads.

The multi-trillion-dollar market projection suggests Ellison anticipates AI inference becoming embedded across virtually every sector of the economy. From financial services processing transactions to healthcare systems analyzing medical images, inference would become fundamental computing infrastructure, comparable to today’s database and networking technologies.

Industry analysts note that realizing this market potential depends on continued AI adoption beyond early-stage deployments. Organizations must successfully transition pilot projects into production systems that deliver measurable business value, justifying sustained infrastructure investment.

Looking Ahead

The inference market timeline remains uncertain, with adoption rates varying significantly across industries and use cases. Regulatory frameworks, data privacy requirements, and integration challenges will influence deployment speeds in different sectors.

Oracle’s strategic focus on inference represents a bet that the AI industry is entering a new phase where operational deployment eclipses research and development as the primary value driver. Success in this market will require infrastructure providers to balance performance, cost efficiency, and reliability while supporting diverse application requirements across industries.

As AI systems move from laboratories to production environments serving billions of users, the infrastructure supporting inference could become one of the largest technology markets of the coming decade.