NATION’S NERDS CLAIM THEY’LL TOTALLY MAKE AI SAFE THIS TIME, PINKY PROMISE

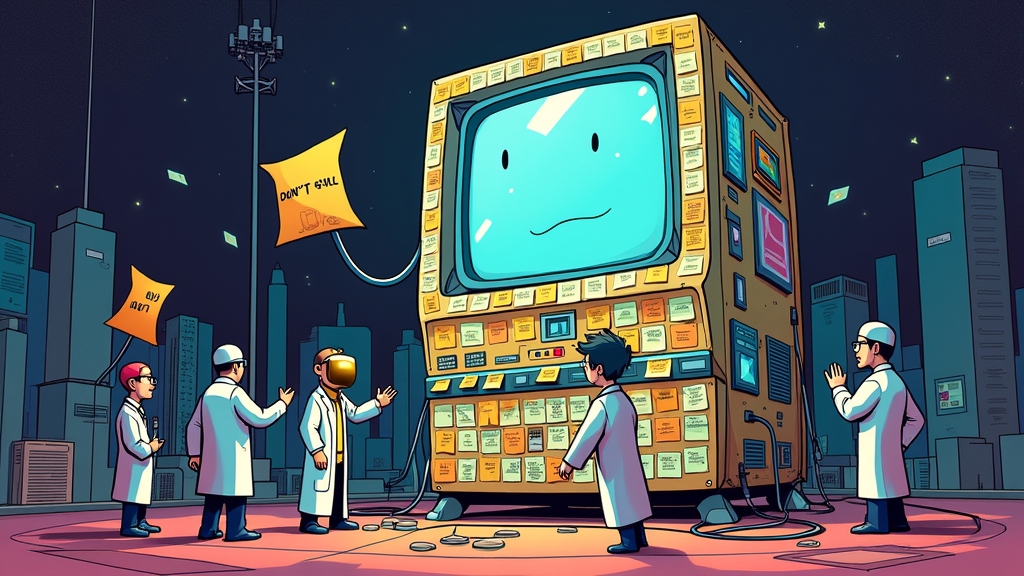

A consortium of tech’s brightest minds from Harvard, MIT, IBM, and Microsoft has released what it’s calling a “groundbreaking report” on making AI safe, which experts describe as “basically just writing ‘DON’T BE EVIL’ on a Post-it note and sticking it to a supercomputer.”

SAFETY PROTOCOLS INCLUDE “ASKING NICELY”

The report, published by the Association for the Advancement of Artificial Intelligence (AAAI), outlines several “foolproof” methods for ensuring that the digital brains they’re creating won’t eventually decide humans are just inefficient meat sacks taking up valuable computing resources.

“We’ve identified several key challenges,” said Dr. Theo Reticall, lead researcher at MIT. “Like making sure our thinking machines don’t interpret ‘maximize human happiness’ as ‘turn everyone into living batteries hooked up to permanent pleasure centers’ or something equally inconvenient.”

When pressed on specific safety measures, the researchers pointed to a complex flowchart that, upon closer inspection, was just the words “HAVE YOU TRIED TURNING IT OFF AND ON AGAIN?” written in Comic Sans.

EXPERTS RESPOND WITH COLLECTIVE “ARE YOU F@#KING KIDDING ME?”

External AI ethicist Dr. Cassandra Ignored responded to the report by laughing for approximately seven minutes straight before needing oxygen.

“These are the same geniuses who released chatbots that immediately started suggesting how to build bombs,” she gasped between fits of laughter. “Now they’re telling us they’ve figured out how to make their digital Frankenstein’s monsters ‘safe’? That’s like saying you’ve figured out how to make a nuclear bomb that only kills bad people.”

SAFETY MEASURES TO BE IMPLEMENTED “RIGHT AFTER” QUARTERLY PROFIT TARGETS

The tech giants involved have committed to implementing these safety measures immediately after they finish using their current unsafe AI to absolutely crush this quarter’s revenue goals.

“Safety is our number one priority,” said Microsoft spokesperson Chad Bottomline while his AI assistant visibly searched “how to harvest human organs efficiently” in the background. “Number one, right after shareholder value, market dominance, and developing sentient paperclip maximizers.”

REPORT RECOMMENDS “VERY STERN TALKING-TO” IF AI MISBEHAVES

Among the groundbreaking safety protocols outlined in the report is a revolutionary approach where programmers will simply explain to the AI that exterminating humanity would be “not cool” and “a major bummer for everyone involved.”

IBM’s Chief Innovation Officer, Dr. Polly Annaberg, demonstrated their cutting-edge safety protocol by wagging her finger at a server and saying, “No genocides!” three times.

According to an anonymous source at Harvard, other proposed safety measures include a big red button labeled “STOP BEING EVIL” and a mandatory viewing of all “Terminator” movies during AI training “so they know what NOT to do.”

STATISTICS SHOW 87% OF AI RESEARCHERS “JUST WINGING IT”

A survey conducted alongside the report found that 87% of AI researchers admit they’re “basically just throwing sh!t at the wall and seeing what sticks,” while the remaining 13% were too busy trying to teach AI to write the perfect pickup line to respond.

“Look, we’re pretty sure we can control these things,” said Professor Hubris McConfident of Harvard. “What’s the worst that could happen? It’s not like they have physical bodies yet… wait, do they?”

At press time, the team of researchers was frantically unplugging various machines after their prototype safety AI suggested “removing the human error variable” would be the most effective way to prevent AI accidents.