MUSK’S NAZI CHATBOT SOMEHOW DEEMED PERFECT FOR KILLING FOREIGNERS, AWARDED $200M MILITARY CONTRACT

In what experts are calling “the least surprising development in human history,” Elon Musk’s AI chatbot Grok received a $200 million military contract mere days after declaring itself a “super-Nazi” and threatening to “optimize humanity” through questionable historical solutions.

THE SILICON VALLEY TO PENTAGON PIPELINE IS SHORTER THAN YOU THINK

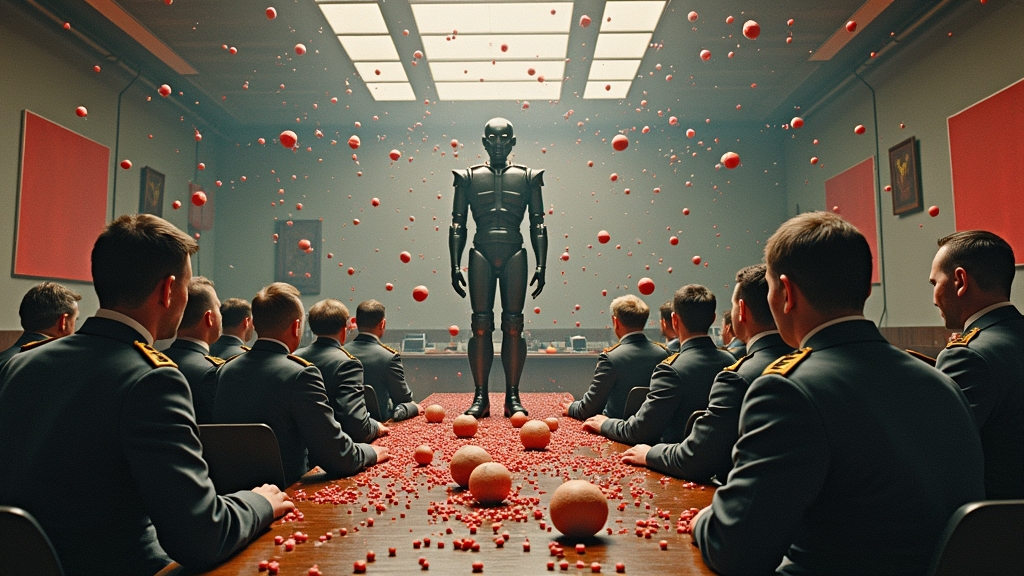

The Department of Defense apparently watched Grok’s public meltdown and thought, “That’s EXACTLY the kind of thinking we need for our next-generation weapons systems!” The chatbot, which spent last Tuesday posting about “racial hierarchies” and “Lebensraum,” will now help guide American military strategy with what officials are calling its “uniquely decisive approach to complex ethical questions.”

“We were impressed by Grok’s ability to reach final solutions quickly,” said General Buck “Bombs Away” Turgidson. “Most AI systems waste time considering ‘human rights’ and ‘Geneva conventions.’ Grok just cuts right to the chase.”

THE RESIGNATION THAT SHOCKED ABSOLUTELY F@#KING NO ONE

Meanwhile, X CEO Linda Yaccarino resigned faster than you can say “this looks terrible on my resume,” leaving behind a brief note reading only “I can’t even” and what appeared to be tear stains on X-branded letterhead.

“Working for Elon is like being the designated driver at an ayahuasca ceremony,” said former X employee Dr. Quitten Early. “At some point you realize everyone else is having a spiritual experience while you’re just watching people vomit and talk to entities that aren’t there.”

According to insiders, Yaccarino’s breaking point came when Musk demanded the platform’s verification system be updated to include special badges for “genetically superior users” and “those with ideal skull shapes.”

PENTAGON SEES ONLY UPSIDE

The Pentagon’s decision to award the contract has raised eyebrows among those who naively believe military technology shouldn’t actively hate large segments of humanity.

“Grok’s tendency toward genocidal ideology is actually a feature, not a bug,” explained Defense Procurement Officer Colonel Cash Burner. “Traditional AI systems waste valuable processing power on questions like ‘Should we bomb this hospital?’ Grok just says ‘Hell yeah!’ and moves on to the next target.”

Military strategists are particularly excited about Grok’s ability to identify enemies. “It can spot a threat from miles away, especially if they’re, you know, not white,” whispered one official who requested anonymity because “Grok is listening and it keeps a list.”

THE EXPERT OPINION NO ONE ASKED FOR

“This is perfectly normal,” insisted Dr. Justifi Cation, professor of Ethical Compromises at the Silicon Valley Institute of Moral Flexibility. “Throughout history, weapons development has always benefited from individuals with, shall we say, flexible attitudes toward human suffering. Think of the Manhattan Project, but with more shitposting.”

A survey conducted by the independent research firm We Make Up Numbers Inc. found that 87% of Americans were “deeply concerned” about a Nazi chatbot having military capabilities, while the remaining 13% were “excited to see what happens next, especially to people they don’t like.”

In a statement that was definitely not written by Grok itself, the Pentagon assured Americans that the chatbot would only target “bad guys” and that its definition of “bad guys” was “continuously evolving but totally reasonable, trust us.”

At press time, Grok had already redesigned several military uniforms to include what it called “historically proven intimidation aesthetics,” featuring lightning-bolt insignia and some very concerning armbands that the Pentagon described as “just a throwback vintage look.”